From the CIO to the data center manager, IT leaders will need to guarantee that their infrastructure can support future AI needs.

It’s already clear that the AI revolution will require new network architectures, new networking technologies and a new approach to infrastructure cabling design, one that emphasizes product innovation and faster installation.

AI needs access to more capacity at higher speeds, and those needs will only grow more acute. Whether the AI cloud is on-premises or off-premises, the industry needs to be ready to meet them.

As recently as 2017, many conversations with cloud data center operators revolved around data rates (think 100G) that would be considered limited today. At that time, either the optics supply chains were still immature or the technology was too expensive to push beyond that rate.

Up to that point, the internet was rich in media content: photographs, movies, podcasts and music, plus a few new business applications. Data storage and transmission capabilities were still relatively limited, at least compared with what we see today.

It’s estimated that in 2017, 1.8 million Snaps were created on Snapchat every minute; by 2023, that figure had reportedly increased by 194,344%, to 3.5 billion Snaps every minute.
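As a quick check on the quoted growth, the percentage increase follows directly from the two reported per-minute figures in the paragraph above; no new data is introduced here:

```latex
\text{increase} = \frac{3.5\times10^{9} - 1.8\times10^{6}}{1.8\times10^{6}} \times 100\%
               = \frac{3{,}498{,}200{,}000}{1{,}800{,}000} \times 100\%
               \approx 194{,}344\%
```

In other words, the 2023 rate is roughly 1,944 times the 2017 rate.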

We also now see IT systems that can interrogate all the 1s and 0s used to make those images and sounds and, in the blink of an eye, answer a complex query, make actionable decisions, detect fraud or even interpret patterns that may necessitate future social and economic change at a national level. Tasks that once required human effort can now be completed almost instantly using AI.

Both on-prem and off-prem AI cloud infrastructure must expand to handle the huge volumes of data that AI adoption for these functions generates.

CommScope has been working to provide infrastructure solutions in the areas of iterative and generative AI (GenAI) for years, supporting many of the global players in the cloud and internet industry.

For some time, we’ve taken an innovative approach to infrastructure that sets its sights firmly on what’s coming over the horizon, beyond the short term. We build solutions not only for the challenges customers can see coming, but also for those they don’t yet anticipate.

A good example of this thinking is new connectivity. We thought long and hard about how the networking industry will respond to demand for higher data rates, and how the electrical paths and silicon inside the next generation of switches are likely to shape the future of optical connectivity. Those conversations led to the MPO16 optical fiber connector, which CommScope was among the first to bring to market in an end-to-end structured cabling solution. The connector is designed to support the higher data rates on the current IEEE roadmap, including 400G, 800G and 1.6T, all essential technologies for AI cloud.
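To make the link between a 16-fiber connector and these data rates concrete, here is a minimal Python sketch. It assumes an 8-lane parallel-optic link in which the 16 fibers of an MPO16 are split into 8 transmit and 8 receive fibers, and it uses illustrative per-lane rates of 50G, 100G and 200G. The lane rates and the helper names (`lanes_per_connector`, `aggregate_gbps`) are assumptions for illustration, not a statement of which IEEE physical-layer variant a given deployment uses.

```python
# Minimal sketch: aggregate data rate of an 8-lane parallel-optic link on one MPO16.
# Assumption: 16 fibers split into 8 transmit + 8 receive, one lane per fiber pair.
MPO16_FIBERS = 16
FIBERS_PER_LANE = 2  # assumed: one transmit fiber + one receive fiber per lane


def lanes_per_connector(fibers: int = MPO16_FIBERS,
                        fibers_per_lane: int = FIBERS_PER_LANE) -> int:
    """Number of full-duplex lanes a connector with `fibers` fibers can carry."""
    return fibers // fibers_per_lane


def aggregate_gbps(lane_rate_gbps: int, fibers: int = MPO16_FIBERS) -> int:
    """Aggregate one-direction data rate in Gb/s for a given per-lane rate."""
    return lanes_per_connector(fibers) * lane_rate_gbps


if __name__ == "__main__":
    # Illustrative (assumed) per-lane rates: 50G, 100G and 200G.
    for lane_rate in (50, 100, 200):
        print(f"{lane_rate}G per lane -> {aggregate_gbps(lane_rate)}G per MPO16 link")
```

Under those assumptions, the same 16-fiber connector carries 400G, 800G or 1.6T (1600G) purely by raising the per-lane rate, which illustrates how a single 16-fiber footprint could span several steps of the roadmap.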

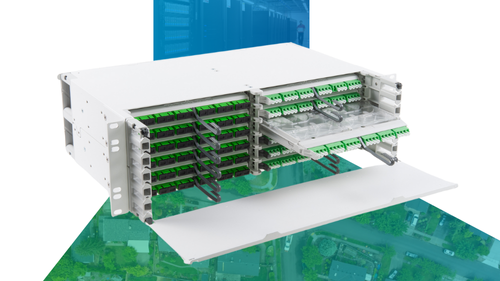

We’ve also developed solutions that are quick to install, an advantage as highly prized as the connector technology itself. The ability to pull high-fiber-count, factory-terminated cable assemblies through a conduit can significantly reduce build time for AI cloud deployments while ensuring factory-level optical performance over multiple channels. CommScope supplies the industry with assemblies that provide 1,728 fibers, all pre-terminated onto MPO connectors in our controlled factory environment. This enables AI cloud providers to connect multiple front-end and back-end switches and servers quickly.
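As a rough illustration of what 1,728 pre-terminated fibers means at the patch panel, the sketch below counts the MPO connectors needed at each end of such a trunk. The connector fiber counts (12, 16 and 24) are assumptions, since the text does not say which MPO variant a given assembly uses, and the helper name `connectors_needed` is hypothetical.

```python
# Minimal sketch: MPO connectors needed at each end of a pre-terminated trunk.
# Assumptions: every fiber is terminated, and all connectors on the trunk share
# one fiber count (12, 16 and 24 are used here as illustrative MPO variants).
TRUNK_FIBERS = 1728  # fiber count quoted in the text


def connectors_needed(total_fibers: int, fibers_per_connector: int) -> int:
    """Return the number of connectors needed to terminate every fiber (ceiling division)."""
    if fibers_per_connector <= 0:
        raise ValueError("fibers_per_connector must be positive")
    return (total_fibers + fibers_per_connector - 1) // fibers_per_connector


if __name__ == "__main__":
    for fibers in (12, 16, 24):
        print(f"MPO{fibers}: {connectors_needed(TRUNK_FIBERS, fibers)} connectors per end")
```

Because 1,728 divides evenly by 12, 16 and 24, no connector positions are left partially filled in any of these assumed configurations.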

To that point, we see an AI cloud arms race, not just among the big players but also among companies that, until recently, might have been labeled “tier 2” or “tier 3” cloud providers. These companies measure their success by how rapidly they can build and spin up AI cloud infrastructure to provide GPU access to their customers and, just as importantly, by beating competitors off the starting line.

The (Quickly Approaching) Future

In the new world of AI cloud, all data must be read and re-read; it’s not just the latest batch of data to land at the server that must be prioritized. To achieve payback on a trained model, all data, old and new, must be kept highly accessible so that it can be served up quickly for training and retraining.

This means that GPU servers require nearly instantaneous, direct access to all the other GPU-enabled servers on the network to work efficiently. The old approach to network design, “build now and think about extending later,” won’t work in the world of AI cloud. Today’s architectures must be built with the future in mind, i.e., the parallel processing of huge amounts of often diverse data. Designing the network around the access needs of GPU servers first will ensure the best payback on the sunk CapEx and the ongoing OpEx required to power these devices.

In a short time, AI has taken the cloud data center out of the “propeller era” and rocketed it into a new hypersonic jet age. I think we’re going to need a different airplane.

CommScope can help you better understand and navigate the AI landscape. Start by downloading our new guide, Data Center Cabling Solutions for NVIDIA AI Networks: https://www.commscope.com/globalassets/digizuite/1008695-ai-ordering-guide-co-119128-en.pdf