The volume of digital traffic pouring into the data center continues to climb; meanwhile, a new generation of applications enabled by advancements like 5G, AI and machine-to-machine communications is driving latency requirements into the single-millisecond range. These and other trends are converging in the data center’s infrastructure, forcing network managers to rethink how they can stay a step ahead of the changes.

Traditionally, networks have had four main levers with which to meet increasing demands for lower latency and increased traffic:

- Reduce signal loss in the link

- Shorten the link distance

- Accelerate the signal speed

- Increase the size of the pipe

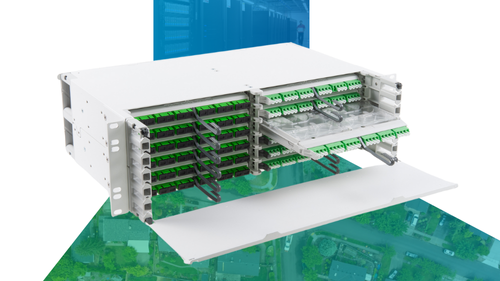

While data centers are using all four approaches at some level, the focus, especially at the hyperscale level, is now on increasing the amount of fiber. Historically, the core network cabling contained 24, 72, 144 or 288 fibers. At these levels, data centers could manageably run discrete fibers between the backbone and switches or servers, then use cable assemblies to break them out for efficient installation. Today, fiber cables are deployed with as many as 20 times more fiber strands, in the range of 1,728, 3,456 or 6,912 fibers per cable.
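As a rough illustration of that jump, the short sketch below compares the fiber counts quoted above against the largest legacy count. The function name is hypothetical, and the multiplier naturally depends on which legacy and current cables are compared.

```python
# Fiber counts quoted in the text.
LEGACY_COUNTS = [24, 72, 144, 288]
HIGH_COUNTS = [1728, 3456, 6912]


def growth_factor(new_count: int, old_count: int) -> float:
    """Return how many times more fibers the new cable carries."""
    return new_count / old_count


# Compare each high-count cable with the largest legacy cable (288 fibers).
largest_legacy = max(LEGACY_COUNTS)
for new_count in HIGH_COUNTS:
    factor = growth_factor(new_count, largest_legacy)
    print(f"{new_count} fibers is {factor:.0f}x a {largest_legacy}-fiber cable")
```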

CommScope’s Jason Bautista and Ken Hall explain how you can adapt to higher fiber counts in your data center.

Higher fiber count combined with compact cable construction is especially useful when interconnecting data centers (DCI). DCI trunk cabling with 3,000+ fibers is common for connecting two hyperscale facilities, and operators are planning to double that design capacity in the near future. Inside the data center, problem areas include backbone trunk cables that run between high-end core switches or from meet-me rooms to cabinet-row spine switches.

Whether the data center configuration calls for point-to-point or switch-to-switch connections, the increasing fiber counts create major challenges for data centers in terms of delivering the higher bandwidth and capacity where it is needed.

The massive amount of fiber creates two big challenges for the data center. The first is how to deploy it in the fastest, most efficient way: how do you put it on the spool, how do you take it off the spool, and how do you run it between points and through pathways? Once it’s installed, the second challenge becomes how to break it out and manage it at the switches and server racks.

Rollable ribbon fiber cabling

The progression of fiber and optical design has been a continual response to the need for faster, bigger data pipes. As those needs intensify, the ways in which fiber is designed and packaged within the cable have evolved, allowing data centers to increase the number of fibers without necessarily increasing the cabling footprint. Rollable ribbon fiber cabling is one of the more recent links in this chain of innovation.

Rollable ribbon fiber is bonded at intermittent points.

Source: ISE Magazine

Rollable ribbon fiber cable is based, in part, on the earlier development of the central tube ribbon cable. Introduced in the mid-1990s, primarily for OSP networks, the central tube ribbon cable featured ribbon stacks of up to 864 fibers within a single, central buffer tube. The fibers are grouped and continuously bonded down the length of the cable, which increases its rigidity. While this has little effect when deploying the cable in an OSP application, a rigid cable is undesirable in a data center because of the routing restrictions it imposes.

In the rollable ribbon fiber cable, the fibers are attached intermittently to form a loose web. This configuration makes the ribbon more flexible, allowing manufacturers to load as many as 3,456 fibers into one two-inch duct, twice the density of conventionally packed fibers. This construction also reduces the bend radius, making these cables easier to work with inside the tighter confines of the data center.

Inside the cable, the intermittently bonded fibers take on the physical characteristics of loose fibers, which flex and bend easily and are therefore simpler to manage in tight spaces. In addition, rollable ribbon fiber cabling uses a completely gel-free design, which reduces the time required to prepare for splicing and, in turn, labor costs. The intermittent bonding still maintains the fiber alignment required for typical mass fusion ribbon splicing.

Reducing cable diameters

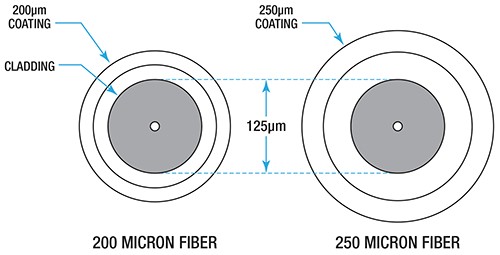

For decades, nearly all telecom optical fiber has had a nominal coating diameter of 250 microns. With growing demand for smaller cables, that has started to change. Many cable designs have reached practical limits for diameter reduction with standard fiber, but a smaller fiber allows additional reductions. Fibers with 200-micron coatings are now being used in rollable ribbon fiber and micro-duct cable.

For optical performance and splice compatibility, 200-micron fiber features the same 125-micron core/cladding as the 250-micron alternative.

Source: ISE Magazine

It is important to emphasize that the buffer coating is the only part of the fiber that has been altered. 200-micron fibers retain the 125-micron core/cladding diameter of conventional fibers for compatibility in splicing operations. Once the buffer coating has been stripped, the splice procedure for 200-micron fiber is the same as for its 250-micron counterpart.
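To get a feel for what the thinner coating buys, the sketch below compares the cross-sectional area of a single coated fiber at the two nominal diameters. It is a simplified, per-fiber estimate only: it assumes perfectly round fibers and ignores the jacket, strength members and packing geometry, so the reduction in overall cable diameter will differ. The function name is hypothetical.

```python
import math


def coated_area_um2(coating_diameter_um: float) -> float:
    """Cross-sectional area of one coated fiber, in square microns."""
    return math.pi * (coating_diameter_um / 2) ** 2


area_250 = coated_area_um2(250)  # conventional coating
area_200 = coated_area_um2(200)  # reduced coating

# Fraction of per-fiber cross-section saved by the thinner coating.
area_saving = 1 - area_200 / area_250
print(f"Per-fiber cross-section reduction: {area_saving:.0%}")
```

Because area scales with the square of the diameter, a 20 percent smaller coating diameter removes roughly a third of each fiber's cross-section, while the 125-micron glass is unchanged.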

New chipsets are further complicating the challenge

All servers within a row are provisioned to support a given connection speed. But in today’s hyper-converged fabric networks, it is extremely rare that all servers in a row will need to run at their maximum line rate at the same time. The ratio between the downstream capacity that has been provisioned for the servers and the upstream bandwidth available to them is known as the oversubscription, or contention, ratio. In some areas of the network, such as the inter-switch link (ISL), the oversubscription ratio can be as high as 7:1 or 10:1. A higher ratio is chosen to reduce switch costs, but the chance of network congestion increases with these designs.
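A minimal sketch of the arithmetic, assuming the common convention that the ratio is provisioned downstream capacity divided by available upstream capacity. The function name and the link counts in the example are hypothetical, chosen only to land on the 7:1 figure mentioned above.

```python
from fractions import Fraction


def oversubscription_ratio(downstream_gbps: int, upstream_gbps: int) -> Fraction:
    """Provisioned downstream capacity divided by available upstream capacity."""
    if upstream_gbps <= 0:
        raise ValueError("upstream capacity must be positive")
    return Fraction(downstream_gbps, upstream_gbps)


# Hypothetical example: 28 downstream links at 25G sharing one 100G uplink.
downstream = 28 * 25  # 700G provisioned
upstream = 1 * 100    # 100G available
ratio = oversubscription_ratio(downstream, upstream)
print(f"Oversubscription: {ratio.numerator}:{ratio.denominator}")
```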

Oversubscription becomes more important when building large server networks. As switch-to-switch bandwidth capacity increases, the number of switch connections decreases. This requires multiple layers of leaf-spine networks to be combined to reach the number of server connections required. However, each switch layer adds cost, power and latency. Switching technology has been focused on this issue, driving a rapid evolution in merchant silicon switching ASICs. On December 9, 2019, Broadcom Inc. began shipping the latest StrataXGS Tomahawk 4 switch, enabling 25.6 Tbps of Ethernet switching capacity in a single ASIC. This comes less than two years after the introduction of the Tomahawk 3, which clocked in at 12.8 Tbps per device.

These ASICs have not only increased lane speed, they have also increased the number of ports they contain, which helps data centers keep the oversubscription ratio in check. A switch built with a single TH3 ASIC supports 32 400G ports. Each port can be broken out into eight 50GE ports for server attachment, or ports can be grouped to form 100G, 200G or 400G connections. Each switch port may migrate between one, two, four or eight pairs of fibers within the same QSFP footprint.

While this seems complicated, it is very useful in helping to eliminate oversubscription. These new switches can now connect up to 192 servers while still maintaining 3:1 contention ratios and eight 400G ports for leaf-spine connectivity! One such switch can now replace six previous-generation switches.
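The 192-server figure can be reproduced from the port counts given above. The sketch below assumes that every port not used as an uplink is broken out into eight 50GE server links; the constant names are illustrative.

```python
# Figures from the text for a switch built on a single TH3 ASIC.
TOTAL_PORTS = 32        # 400G ports per switch
PORT_SPEED_G = 400
UPLINK_PORTS = 8        # 400G ports reserved for leaf-spine connectivity
BREAKOUT_PER_PORT = 8   # each remaining port splits into eight server links
SERVER_SPEED_G = 50     # 50GE per server attachment

downlink_ports = TOTAL_PORTS - UPLINK_PORTS    # ports left for servers
servers = downlink_ports * BREAKOUT_PER_PORT   # server connections
downstream_g = servers * SERVER_SPEED_G        # capacity provisioned to servers
upstream_g = UPLINK_PORTS * PORT_SPEED_G       # capacity toward the spine
contention = downstream_g / upstream_g

print(f"{servers} servers, {downstream_g}G down, {upstream_g}G up, "
      f"{contention:.0f}:1 contention")
```

Reserving more ports for uplinks lowers the contention ratio but also lowers the number of servers a single switch can attach, which is the trade-off the port budget makes visible.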

The new TH4 switches will have 32 800G ports. ASIC lane speeds have increased to 100G. New electrical and optical specifications are being developed to support 100G lanes. The new 100G ecosystem will provide an optimized infrastructure that is better suited to the demands of new workloads like machine learning or AI.
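As a quick consistency check, the port counts and port speeds quoted for the two ASIC generations multiply out to the stated switching capacities. The sketch below also derives an implied lane count; the 50G lane speed for TH3 is an assumption inferred from the eight-way 50GE breakout of a 400G port, and the function names are hypothetical.

```python
def switching_capacity_tbps(ports: int, port_speed_gbps: int) -> float:
    """Aggregate capacity in Tbps for a switch with identical ports."""
    return ports * port_speed_gbps / 1000


def lane_count(ports: int, port_speed_gbps: int, lane_speed_gbps: int) -> int:
    """Number of lanes implied by the total capacity and per-lane speed."""
    return ports * port_speed_gbps // lane_speed_gbps


# (ports, port speed in G, lane speed in G); the TH3 lane speed is an assumption.
asics = {
    "TH3": (32, 400, 50),
    "TH4": (32, 800, 100),
}

for name, (ports, port_speed, lane_speed) in asics.items():
    capacity = switching_capacity_tbps(ports, port_speed)
    lanes = lane_count(ports, port_speed, lane_speed)
    print(f"{name}: {capacity} Tbps across {lanes} lanes of {lane_speed}G")
```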

The evolving role of the cable provider

In this dynamic and more complex environment, the role of the cabling supplier is taking on new importance. While fiber cabling may once have been seen as more of a commodity product than an engineered solution, that is no longer the case. With so much to know and so much at stake, suppliers have transitioned to technology partners, as important to the data center’s success as the system integrators or designers.

Data center owners and operators are increasingly relying on their cabling partners for their expertise in fiber termination, transceiver performance, splicing and testing equipment, and more. As a result, this increased role requires the cabling partner to develop closer working relationships with those involved in the infrastructure ecosystem as well as the standards bodies.

As the standards surrounding variables such as increased lane speeds multiply and accelerate, the cabling partner is playing a bigger role in enabling the data center’s technology roadmap. Currently, the standards regarding 100GE/400GE and the evolving 800 Gbps involve a dizzying array of alternatives. Within each option, there are multiple approaches, including duplex, parallel and wavelength division multiplexing, each with a particular optimized application in mind. Cabling infrastructure design should enable all of these alternatives.

All comes down to balance

As fiber counts grow, the amount of available space in the data center will continue to shrink. Look for other components, namely servers and cabinets, to deliver more in a smaller footprint as well. Space won’t be the only variable to be maximized. By combining new fiber configurations like rollable ribbon fiber cables with reduced cable sizes and advanced modulation techniques, network managers and their cabling partners have lots of tools at their disposal. They will need them all.

If the rate of technology acceleration is any indication of what lies ahead, data centers, especially at the hyperscale cloud level, had better strap in. As bandwidth demands and service offerings increase, and latency becomes more critical to the end user, more fiber will be pushed deeper into the network.

The hyperscale and cloud-based facilities are under increasing pressure to deliver ultra-reliable connectivity for a growing number of users, devices and applications. The ability to deploy and manage ever higher fiber counts is intrinsic to meeting those needs. The goal is to achieve balance by delivering the right number of fibers to the right equipment, while enabling good maintenance and manageability and supporting future growth. So, set your course and have a solid navigator like CommScope on your team.