Many people liken the data center to the “brain” of the corporate network. Right now, a huge new technology project with monumental, global implications is taking place in the European Union: the Human Brain Project (HBP). The project is funded to the tune of €1 billion and is expected to take 10 years to complete. Like the Human Genome Project before it, the HBP has an ambitious objective: to develop a full computer simulation of the human brain.


Professor Henry Markram, director of the HBP, describes the project as a way to understand what makes the human brain unique and the basic mechanisms behind cognition and behavior, to diagnose brain diseases objectively, and to build new technologies inspired by how the brain computes.

The challenges are many, but one key hurdle is the sheer volume of data that already exists and continues to grow as researchers around the world study the brain using different techniques and publish their findings in tens of thousands of neuroscience papers every year. Markram notes that what is missing today is a way to bring all of these perspectives together. The problem is too complex to solve through discussion and papers alone; a digital, graphical version of the data is needed to integrate all of this information into one holistic view.

Which brings me back to the data center, and how closely all of this mirrors the challenges faced by today’s data center managers. A vast amount of data about the physical contents and configuration of the data center is collected from multiple sources, for varied purposes and in varied formats. The problem is made worse by the “silos” that separate the IT staff responsible for servers and other computing assets from the facilities personnel responsible for power, cooling, and the rest of the physical plant. Getting people together face-to-face or circulating disparate reports is both inefficient and ineffective.

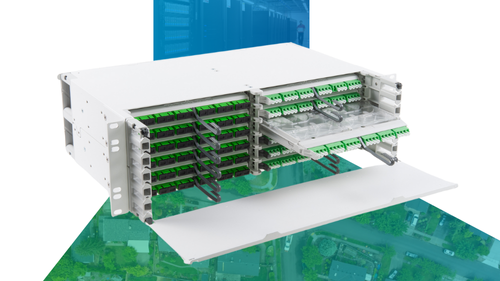

What’s the good news? A “digital, graphical” model of the data center can now be created to integrate all of this information into one holistic view, and it doesn’t cost €1 billion. Our iTRACS Data Center Infrastructure Management (DCIM) software suite gives the data center manager an interactive 3D visualization of the data center and all of its interconnections, enabling powerful analytics that drive business efficiency. iTRACS turns vast amounts of operational data about the physical infrastructure (power, space, cooling, and network services) into meaningful, understandable information visualized in a navigable model of the data center. This end-to-end model, with all of its dashboards, reports, and analytics, turns guesswork into comprehensive, holistic knowledge that everyone can share and act on together.

By integrating all of the available data into a powerful simulation, HBP scientists are working to enable a “Big Data” approach to medicine that may lead to personalized drugs based on an individual patient’s symptoms, behaviors, and direct measurements. While the optimization goals of a DCIM solution are far less grandiose and altruistic, they are likely no less important to those who face these challenges every day.

What are your thoughts on DCIM and how it can help manage the “brain” of your business network?